- A P-value ≤0.05 does not equate to scientific or policy importance.

- The P-value ≤0.05 cutoff has been called an arbitrary threshold, and crossing it has been said not to separate real results from false ones.

- The focus on statistical significance has led researchers to trawl through the data for findings that yield a P-value ≤0.05.

- Assigning statistical significance to a P-value ≤0.05 in medicine has been said to have caused harm to patients with serious diseases.

- P-values do not measure the probability that the studied hypothesis is true, or the probability that the data were produced by random chance alone.

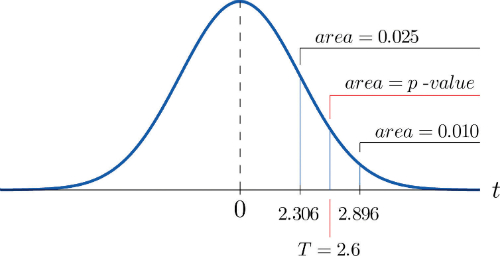

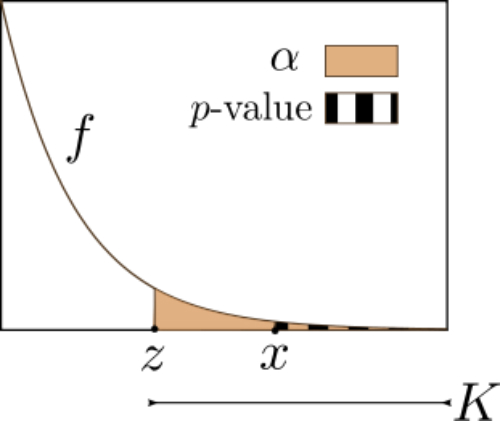

In much of medical research, P-values (Figure 1) have become a key factor in deciding which findings are statistically significant and which studies are worthy of publication. As a result, research that produces P-values ≤0.05 is more likely to be published, while studies of equal or greater scientific importance may never be published or seen by the medical community. The P-value ≤0.05 was called “an arbitrary threshold” in a recent article published on FiveThirtyEight.com.

FiveThirtyEight.com is a website overseen by Nate Silver, a statistician who was one of the few to accurately predict the outcome of the 2008 U.S. presidential race, and who remained accurate at nearly every step of the campaign. Mr. Silver’s accuracy has given him considerable clout in statistical matters of all sorts, from sports to politics to science.

American Statistical Association Consensus Committee

The consequences of misusing P-values can be devastating, said Donald Berry, a biostatistician at the University of Texas MD Anderson Cancer Center. “Patients with serious diseases have been harmed,” he wrote in a recent commentary. “Researchers have chased wild geese, finding too often that statistically significant conclusions could not be reproduced.” Faulty statistical conclusions, he added, have real economic consequences.

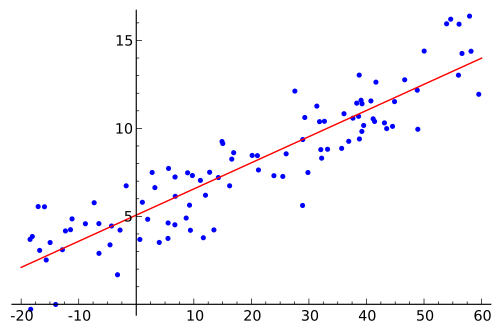

“The P-value was never intended to be a substitute for scientific reasoning,” wrote Ron Wasserstein in a press release. Mr. Wasserstein, Executive Director of the American Statistical Association (ASA), co-chaired a consensus committee that examined statistical methods in science, including P-values. (Figure 2.)


Even the Supreme Court has weighed in, unanimously ruling in 2011 that statistical significance does not automatically equate to scientific or policy importance.

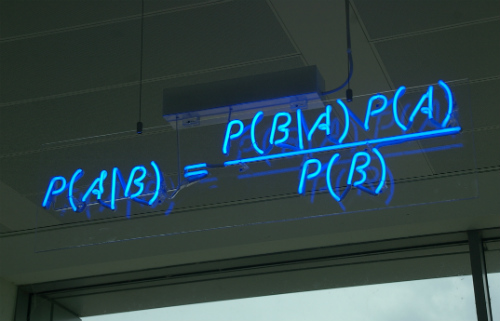

Scientific and medical statisticians, and members of the consensus committee, seem to agree with Mr. Wasserstein on this point. “But statisticians have deep philosophical differences about the proper way to approach inference and statistics, and ‘this was taken as a battleground for those different views,’ said Steven Goodman, Co-Director of the Meta-Research Innovation Center at Stanford University [METRICS]. Much of the dispute centered around technical arguments over frequentist versus Bayesian methods and possible alternatives or supplements to P-values. ‘There were huge differences, including profoundly different views about the core problems and practices in need of reform,’ Mr. Goodman said. ‘People were apoplectic over it,’” according to the article on FiveThirtyEight.com. (Figure 3.)

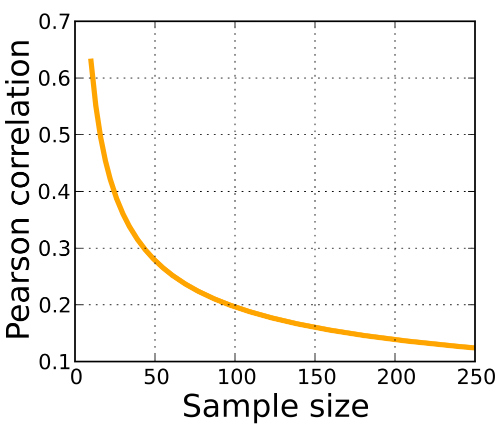

Furthermore, according to FiveThirtyEight.com, “the group debated and discussed the issues for more than a year before finally producing a statement they could all sign. They released that consensus statement late last year, along with 20 additional commentaries from members of the committee. The ASA statement is intended to address the misuse of P-values and promote a better understanding of them among researchers and science writers, and it marks the first time the association has taken an official position on a matter of statistical practice.” (Figure 4.)

Consensus Committee Mission and Findings

The consensus statement outlines several fundamental principles regarding P-values. A first task was agreeing on a definition of the P-value that all members of the scientific community could understand and use in a meaningful way. Informally, the P-value is often described as the probability that the findings of an experimental trial were produced by the experimental intervention rather than by chance. However, the technical definition of a P-value is “the probability of getting results at least as extreme as the ones you observed, given that the null hypothesis is correct,” Christie Aschwanden explained in her FiveThirtyEight.com article.
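To make the technical definition concrete, the short Python sketch below computes an exact P-value for a hypothetical experiment: 15 heads in 20 flips of a coin, with the null hypothesis that the coin is fair. The function name `binomial_p_value` and the coin-flip data are illustrative assumptions, not taken from the ASA statement. It treats an outcome as “at least as extreme” when it lies as far or farther from the expected count as the observed one, which is one of several common two-sided conventions.

```python
from math import comb


def binomial_p_value(successes: int, trials: int, p_null: float = 0.5) -> float:
    """Two-sided exact P-value: the probability, assuming the null hypothesis
    (success probability p_null), of a result at least as far from the
    expected count as the one observed."""
    expected = trials * p_null
    observed_distance = abs(successes - expected)
    total = 0.0
    for k in range(trials + 1):
        # Outcome k counts as "at least as extreme" if it lies as far or farther
        # from the expected count (small tolerance guards against float error).
        if abs(k - expected) >= observed_distance - 1e-9:
            total += comb(trials, k) * p_null**k * (1 - p_null) ** (trials - k)
    return min(total, 1.0)


# Hypothetical example: 15 heads in 20 flips of a coin assumed to be fair.
p = binomial_p_value(15, 20)
print(f"P = {p:.4f}")
```

Here P is about 0.041. That number describes how surprising 15 or more heads (or 5 or fewer) would be if the coin were fair; it is not the probability that the coin is fair.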

One of the most important points agreed on by the committee is that the P-value does not reveal whether the experimental hypothesis is correct. Instead, it is the probability of the data given a hypothesis, specifically the null hypothesis. A common misconception among nonstatisticians is that P-values can be used to discern the probability that a result occurred by chance. This interpretation is dead wrong, yet it appears again and again. The P-value only tells you something about the probability of seeing your results given a particular hypothetical explanation; it cannot tell you the probability that the results are true or whether they’re due to random chance, the consensus statement notes.

In fact, Principle No. 2 of the ASA statement reads: “P-values do not measure the probability that the studied hypothesis is true, or the probability that the data were produced by random chance alone.” (Figure 5.)
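A bit of arithmetic shows why a P-value ≤0.05 does not mean a 5% chance that a finding is a fluke. The sketch below assumes a hypothetical research field in which 10% of tested hypotheses are actually true and studies have 80% power; both numbers are illustrative assumptions, not figures from the ASA statement, and the helper name `false_positive_share` is hypothetical.

```python
def false_positive_share(prior_true: float, power: float, alpha: float = 0.05) -> float:
    """Share of results with P <= alpha that come from hypotheses where the
    null is actually true, given the stated (assumed) inputs."""
    false_pos = (1 - prior_true) * alpha  # true nulls that cross the threshold by chance
    true_pos = prior_true * power         # real effects that are detected
    return false_pos / (false_pos + true_pos)


# Hypothetical field: 10% of tested hypotheses are true, studies have 80% power.
share = false_positive_share(prior_true=0.10, power=0.80)
print(f"{share:.0%} of 'significant' findings are false positives")
```

Under these assumed inputs, about 36% of the results that clear P ≤0.05 would be false positives, far more than 5%. The share depends heavily on the assumed proportion of true hypotheses and on power, neither of which the P-value itself reports.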

“Nor can a P-value tell you the size of an effect, the strength of the evidence or the importance of a result. Yet despite all these limitations, P-values are often used as a way to separate true findings from spurious ones, and that creates perverse incentives,” according to the FiveThirtyEight.com article.
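The point that a P-value says nothing about effect size can be illustrated with two hypothetical studies. The sketch assumes a simple one-sample z-test with a known standard deviation of 1; the function name and all the numbers are illustrative. One study finds a tiny mean difference in a very large sample, the other a large difference in a small sample.

```python
from math import erfc, sqrt


def z_test_p_value(mean_diff: float, sd: float, n: int) -> float:
    """Two-sided P-value for a one-sample z-test of H0: true mean difference = 0,
    treating the standard deviation sd as known."""
    z = mean_diff / (sd / sqrt(n))
    return erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|))


# Two hypothetical studies that happen to share the same z statistic (z = 4).
small_effect = z_test_p_value(mean_diff=0.02, sd=1.0, n=40_000)
large_effect = z_test_p_value(mean_diff=0.50, sd=1.0, n=64)
print(f"tiny effect, huge sample:   P = {small_effect:.2g}")
print(f"large effect, small sample: P = {large_effect:.2g}")
```

Both studies produce the same P-value, about 6 × 10⁻⁵, even though the second effect is 25 times larger. Reading the P-value alone, a reader could not tell the two apart.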

The concern among statisticians is that, in many published articles, the goal has shifted from proving or disproving the hypothesis, that is, from seeking the truth, to obtaining a P-value ≤0.05. This may lead researchers to comb through subsets of the data, applying one analysis after another until one yields a P-value ≤0.05.
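Why repeated analyses are a problem can be seen with a simple calculation. If every null hypothesis is true and each analysis is an independent test at the 0.05 level, the chance that at least one of them produces P ≤0.05 grows quickly with the number of analyses. Independence is a simplifying assumption here: re-analyses of the same data are usually correlated, so the real figure will differ. The function name is hypothetical.

```python
def chance_of_false_positive(num_tests: int, alpha: float = 0.05) -> float:
    """Probability that at least one of num_tests independent tests yields
    P <= alpha when every null hypothesis is true."""
    return 1 - (1 - alpha) ** num_tests


for k in (1, 5, 20):
    print(f"{k:>2} analyses: {chance_of_false_positive(k):.0%} chance of a spurious 'hit'")
```

Under these assumptions, 5 analyses give roughly a 23% chance of at least one spurious “significant” result, and 20 analyses give roughly 64%.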

Members of the ASA committee argued in commentaries that P-values in and of themselves are not the core problem. Instead, “failing to adjust them for cherry picking, multiple testing, post-data subgroups and other biasing selection effects” is at fault, wrote Deborah Mayo, a philosopher of statistics at Virginia Tech. When P-values are treated as a way to sort results into bins labeled significant or not significant, “the vast efforts to collect and analyze data are degraded into mere labels,” said Kenneth Rothman, an epidemiologist at Boston University, according to the FiveThirtyEight.com report.
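One standard way to adjust P-values for multiple testing is the Holm-Bonferroni step-down method, sketched below. This is a technique chosen here for illustration, not one prescribed by Ms. Mayo or the ASA statement. It only helps when every test actually performed is counted, and it does nothing about subgroups chosen after looking at the data. The function name and the five P-values are hypothetical.

```python
def holm_adjust(p_values: list[float]) -> list[float]:
    """Holm-Bonferroni adjusted P-values, returned in the original order.
    Controls the family-wise error rate across all the tests performed."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices, smallest P first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # Multiply the rank-th smallest P by (m - rank), cap at 1,
        # then enforce that adjusted values never decrease.
        candidate = min(1.0, (m - rank) * p_values[i])
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted


# Hypothetical: five subgroup analyses, one of which "reached" P = 0.04.
raw = [0.04, 0.20, 0.35, 0.51, 0.80]
print(holm_adjust(raw))
```

After adjustment, the apparently significant P = 0.04 becomes 0.2, well above the 0.05 threshold, once the other four analyses are taken into account.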

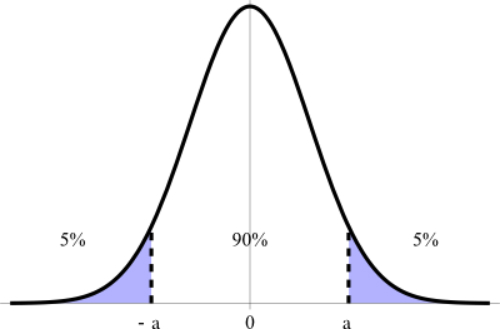

The commentaries published with the ASA statement presented a range of ideas about better ways to use statistics in science. Some members argued that researchers should instead rely on other measures, such as confidence intervals or Bayesian analyses. “The solution is not to reform P-values or to replace them with some other statistical summary or threshold,” wrote Columbia University statistician Andrew Gelman, “but rather to move toward a greater acceptance of uncertainty and embracing of variation.” (Figure 6.)
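Confidence intervals, one of the alternatives mentioned above, report an estimate's size together with its uncertainty. The sketch below computes a normal-approximation 95% interval for a mean difference, assuming a known standard deviation; the function name and the numbers are illustrative. The two hypothetical studies each have z = 4, and therefore identical P-values, yet their intervals tell very different stories. Bayesian analyses, the other alternative mentioned, are not shown here.

```python
from math import sqrt
from statistics import NormalDist


def normal_ci(mean_diff: float, sd: float, n: int, level: float = 0.95) -> tuple[float, float]:
    """Normal-approximation confidence interval for a mean difference,
    treating the standard deviation sd as known."""
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    half_width = z_crit * sd / sqrt(n)
    return mean_diff - half_width, mean_diff + half_width


# Two hypothetical studies with z = 4, and hence the same P-value.
print(normal_ci(0.02, 1.0, 40_000))  # roughly (0.010, 0.030)
print(normal_ci(0.50, 1.0, 64))      # roughly (0.255, 0.745)
```

The first interval shows an effect that is reliably nonzero but tiny; the second shows a much larger effect estimated with less precision.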


“If there’s one takeaway from the ASA statement, it’s that P-values are not badges of truth and P ≤0.05 is not a line that separates real results from false ones. They’re simply one piece of a puzzle that should be considered in the context of other evidence,” wrote Ms. Aschwanden in her FiveThirtyEight.com article.